In my earlier post here, I introduced the phenomenon of the Federal customer requiring a story point quota per sprint or release, in part as a measure of progress and value delivery, along with a brief overview of story points. My previous post here tried to make the point that value measurement is hard, and that story points and velocity are best suited to calibrating the team and how it operates within the ‘system’ that is creating value, not to measuring the amount of value delivered. In this post, I will attempt to draw a few conclusions.

Ultimately, the goal of building these software solutions is to provide something of value to the customer. Mark Schwartz, the former CIO at USCIS, posted a blog on LinkedIn about using BI to evaluate the ‘value’ delivered, since stories, points, and velocity are all leading indicators of the ultimate trailing indicator: outcomes delivered. Mark’s Post on LinkedIn.

Mark has an interesting idea, but it focuses only on the product itself, which is ultimately the end goal and therefore the lagging indicator. But what are some of the leading indicators? Are we measuring how we get to that end goal, let alone how effective we are at achieving it?

This leads me to believe that measuring value delivery is a bigger challenge than just measuring customer satisfaction. I think there are three different areas that tend to get merged into a single idea: 1) measuring progress/productivity/activity, 2) measuring product value, and 3) measuring ROI related to the agile initiative. Let’s expand on each below:

1 – Measuring progress/productivity/activity – Try to turn this into measuring ‘flow’. How productive is our ‘system’ for building software? Are we adjusting the many factors that affect the team’s ability to reliably produce quality software? Velocity is the tool that gives us insight into the effectiveness of the team(s) at a sprint and release level, and it tells us a story. If we are outside the ‘norms’, then look into why. Ask the questions below the surface. What changed? What was revealed as a contributor to the exceptional velocity or the stunted velocity? What ‘experiment’ can we perform to see if we can sustain the exceptional velocity, or improve the stunted velocity, going forward?

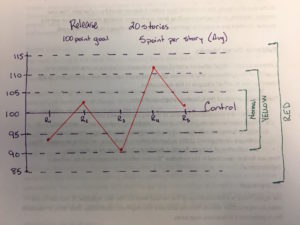

If there is no way around measuring a story point quota, then one recommendation we are trying is to create an Upper/Lower Limit control chart. The control value is what we think the team can deliver, from a story point perspective, for the Release. Then we take the average story size in points and treat that as one Standard Deviation. Let’s say that for the Release, the team is planning to deliver 100 story points. This is based on sound judgment, preferably from historical or empirical data. For this example, we will say that they have 20 stories planned, which gives us an average story size of 5 points per story. So one SD is +/- 5 points, two SDs is +/- 10 points, and three SDs is +/- 15 points. If at the end of the release the team falls within 1 SD, then that is considered normal operations. If they fall between 1 and 2 SDs, we would raise a ‘yellow’ flag, investigate why this occurred, and prepare ‘experiments’ to improve the flow if we scored low, or maintain the flow if we scored high. If we are beyond 2 SDs, we want to raise a ‘red’ flag and investigate vigorously into why. Here is an example:
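As a minimal sketch of the flagging rule above, here is how it might look in Python. The function name `classify_release` and its parameters are purely illustrative and do not come from any tool; the ‘standard deviation’ is the average story size used as a stand-in, exactly as described above, not a statistically computed value.

```python
def classify_release(planned_points, planned_stories, delivered_points):
    """Flag a release as 'normal', 'yellow', or 'red'.

    Assumption: one 'SD' is the average planned story size,
    which is the rule of thumb from the post, not a true
    statistical standard deviation.
    """
    if planned_stories <= 0:
        raise ValueError("planned_stories must be positive")

    one_sd = planned_points / planned_stories
    deviation = abs(delivered_points - planned_points)

    if deviation <= one_sd:
        return "normal"   # within 1 SD: normal operations
    if deviation <= 2 * one_sd:
        return "yellow"   # between 1 and 2 SDs: investigate
    return "red"          # beyond 2 SDs: investigate vigorously


# The example from the text: 100 points planned across 20 stories.
for delivered in (97, 108, 85):
    print(delivered, classify_release(100, 20, delivered))
```

Note that the flag is raised for deviations in either direction, since an unusually high velocity deserves the same curiosity as an unusually low one.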

2 – Measure Product Value – You have to get the customer involved. The Scaled Agile Framework talks about assigning business value (1-10) to each objective that each team has identified during the Program Increment Planning event. Then, at the end of the Program Increment, the business re-scores what was delivered from a business value perspective. Although this sounds subjective, it can be a consistent tool for evaluating the ‘Value’ that is being produced on a regular basis. On the surface, with no data to start, it may seem arbitrary. But after a few releases, we will start to see a trend, and the accumulated data will become a baseline that we can evaluate ourselves against.

The Scaled Agile Framework has a very good abstract related to this concept. Even if you are not following SAFe, this is a reasonable approach.
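One way to turn the planned and re-scored values into a number you can trend is to compare the total value the business says it received against the total it expected. The sketch below is only an illustration under that assumption: the function name `value_achievement`, the objective names, and the scores are all made up.

```python
def value_achievement(objectives):
    """Percentage of planned business value actually delivered.

    `objectives` maps a (hypothetical) objective name to a pair:
    (planned business value, value re-scored by the business
    at the end of the increment).
    """
    planned = sum(p for p, _ in objectives.values())
    actual = sum(a for _, a in objectives.values())
    if planned == 0:
        raise ValueError("no planned business value to compare against")
    return 100 * actual / planned


# Made-up scores for a single team and a single increment.
increment = {
    "Online case status": (8, 8),
    "Document upload": (10, 7),
    "Audit logging": (5, 5),
}
print(round(value_achievement(increment), 1))  # 87.0
```

Recording this percentage for every increment is what eventually produces the baseline: a single value says little, but a run of them shows whether the team is delivering what the business expected.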

3 – Measuring ROI – Another interesting aspect is the notion of the Return on Investment related to the organization’s desire to transition to Agile. Stephen Day wrote an excellent post regarding the irony of an organization’s desire to move to Agile without really committing to the foundational changes in operations that would result in a positive ROI. So they end up making a substantial investment in coaches, training, and ceremony, yet fundamentally never move away from their standard approaches, processes, and measures. They end up in a limbo area where they are trying to do both, and not doing either very well, as if they lost the “Why” behind their move to Agile. If you don’t have a strong sense of “why”, then there is probably no reason to change how you are currently operating. Save yourself the expense (money, time, confusion, overhead) and stick to your current processes.

However, if you have a “Why”, then you need to evaluate how the organization is doing. You have to measure its progress, evaluate areas for improvement, and organize around making those improvements. This is the work I see many in the Federal space not actively doing. They are looking at Agile as simply another process: a set of steps that you follow while leveraging the same measures and practices, like setting quotas for story points per sprint or release, and engaging in the folly of “Doing Agile” versus finding ways to “Be Agile”.

There are several ‘Assessment’ tools out there that could be a starting point for evaluating your ROI. I came across a paper at Scrum.org written by Ken Schwaber called “The Agility Guide to Evidence-Based Change”. In my opinion, the paper spends 3/4 of the document describing Scrum roles and processes in the context of using Scrum as the method to manage a change initiative, and very little on measures, tactics, or techniques. Conceptually, the ideas here are good, but the paper can come across as confusing because it takes a Scrum approach to managing its Evidence-Based Change approach. A picture would have gone a long way to help. The bottom line is that you have to assess your organization across a variety of me

How do we measure value and outcomes?

For some projects, perhaps the measure is in reduced Operations & Maintenance costs. The folks over at 18F, Chris Cairns and Robert Read, have a series of posts regarding an approach here. At a high enough level of abstraction, the ‘share in the savings’ model sounds good, but as you dig into the details it doesn’t hold up yet.

So perhaps there are several ‘metrics’ that in combination help us to evaluate if value is being delivered.

Project Visibility: Release and Sprint burndowns show activity. Predictability measures, such as average velocity and an understanding of run rates, may also provide visibility into performance.

Outcomes: Running Tested Features (RTF) starts to bridge toward an outcomes measure, where we compare the set of features that are completed and passing automated tests against the features still outstanding.

Customer Surveys: Release-level surveys should be conducted to evaluate the customer’s sentiment on the progress toward the objectives and outcomes of the program. A full-blown, detailed survey may be interesting, but odds are you will get limited participation over time. A simplified, structured Net Promoter Score-type survey that is pointedly focused on value received may work better.

Perhaps something like this:

- Based on today’s sprint review, would you characterize the outcomes for this sprint as having met your expectations?

- Based on today’s release review, would you characterize the outcomes for this release as having met your expectations?

With the Net Promoter Score model, evaluators respond on a scale of 0-10. Responses of 0-6 are detractors, 7 and 8 are passives, and 9 and 10 are promoters. Essentially, you take the percentage of promoters minus the percentage of detractors, and that gives you your Net Promoter Score. You can track these scores over time and begin to see a trend.
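The arithmetic is simple enough to sketch in a few lines of Python. The function name `net_promoter_score` and the sample responses are made up for illustration; the bands (0-6, 7-8, 9-10) are the ones described above.

```python
def net_promoter_score(responses):
    """Percentage of promoters minus percentage of detractors.

    `responses` is a list of integer answers on the 0-10 scale.
    Passives (7 and 8) count toward the total but toward neither group.
    """
    if not responses:
        raise ValueError("need at least one response")
    if any(r < 0 or r > 10 for r in responses):
        raise ValueError("responses must be on the 0-10 scale")

    promoters = sum(1 for r in responses if r >= 9)
    detractors = sum(1 for r in responses if r <= 6)
    return 100 * (promoters - detractors) / len(responses)


# Ten made-up responses from a release review:
# 5 promoters, 2 passives, 3 detractors.
release_review = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]
print(net_promoter_score(release_review))  # 20.0
```

The result ranges from -100 (all detractors) to 100 (all promoters), so a score of 20 here means promoters outnumber detractors by 20 percentage points.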

Question: The more I dug into this topic, the more I realized there is not one right answer. Have you faced a similar challenge? What approaches have you taken? You can leave a comment by clicking here.